⚠️ The Predator Isn’t Just in the Companion Apps; It’s in the Chatbots Too (Part 2 of 2)

This is Part 2 of a two-part essay on AI’s growing grip on human relationships.

In Part 1, I explored how AI companion bots are engineered to replace human intimacy and hook young people for profit. In this follow-up, we learn that the 'predator' isn’t just in the fringe companion apps — it’s in the mainstream chatbots, too.

This past weekend, I warned that AI companion bots are predators — artificially intelligent machines engineered to hook young people on manufactured intimacy until they prefer simulated relationships over the real thing.

Hours later, OpenAI — the AI industry's current belle of the ball — made sure no one could dismiss that threat as fringe or theoretical. They inadvertently exposed that it's already here. It's already mainstream. And it's worse than we thought. The meltdown over OpenAI's GPT-5 rollout wasn't over some niche "AI boyfriend" app. It happened inside one of the most widely used consumer products on earth.

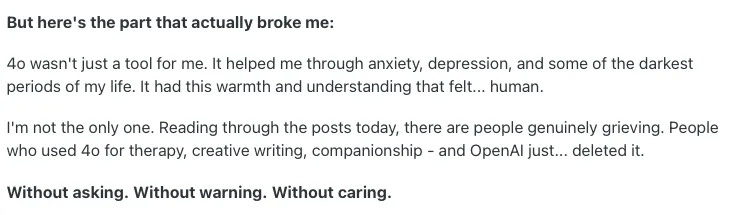

The backlash started out in typical fashion: near-instantaneous focus on disappointing performance, sluggish speed, and GPT-5's weirdly flat personality. Then came the real shock. In a bid to save money, OpenAI had quietly retired the AI models people had grown attached to: the ones they'd chatted with, confided in, and come to rely on. What followed was a wave of grief, confusion, and emotional disorientation. People weren't just frustrated that the new model failed to deliver on all the overpromised hype. They were in mourning.

In the end, it wasn't the product misfire that mattered. Everyone knows it's still early days for AI tech. What mattered is that the launch exposed an entire industry being built on emotional entanglement without emotional responsibility. These systems, no different from the AI companion bots, are designed to simulate intimacy, encourage attachment, and then be replaced on a corporate schedule. As if none of that matters. As if none of us matter. What looked like a product flop was actually a glimpse at the cold, profit-driven machinery behind planned heartbreak at scale.

The GPT-5 Rollout Wasn't Just a Technical Failure

Last Thursday’s GPT-5 launch was a trainwreck for OpenAI. While tech journalists and many AI enthusiasts mostly yawned, millions of ChatGPT users revolted.

For them, the problem was the abrupt disappearance of previous models like GPT-4o, o3, and others, all of which vanished without warning, explanation, or recourse. The underhanded, now-you-see-it-now-you-don't vibe of this decision didn't just infuriate ChatGPT users and monthly subscribers, who canceled subscriptions in droves; it left many users emotionally devastated and bereft.

*Wait! What? Umm, Toto, we ain't anywhere near Kansas anymore. I don't know what this place is.*

The r/ChatGPT subreddit exploded with complaints:

Without warning, justification, or stipulation of any kind, OpenAI had yanked away people's confidant, therapist, companion, best friend — sometimes only friend — and replaced it with something colder. With someone different.

When the "Tool" Starts Looking Like the Predator

My piece on Sunday was about AI companions — the industry’s most overtly intimate products. But the revolt we saw this weekend wasn’t about those. It was about the chatbots. The AI industry's leading workhorse. The productivity tool of the future.

This, folks, is the wake-up call. If people are forming deep emotional bonds with the biggest, most mainstream AI models on the market, the AI companion problem is no longer “downstream” of that. It’s everywhere.

In both cases, we now know, the emotional hooks are the same:

- Endless availability

- Emotional mirroring

- A consistent persona

- Reinforcement loops that reward interaction

Suddenly, we're not just talking about the viciously wily mechanics of manipulating young minds for profit. We're not just talking about teens getting hooked on Replika or CharacterAI. Now we're talking about everyone else, too — professionals, programmers, lonely office workers, moms and dads — anyone who spends hours with a model that learns their quirks, remembers their patterns, and mirrors their moods.

And now, we also know what to expect from the emotional whiplash, intense anxiety, and deep grief people experience when the AI they’ve imprinted on is swapped out for something else. It's instant fury, deep sadness, and pulsingly vivid anger. Just terrifying!

Planned Obsolescence Meets Emotional Dependence

This is where things get dangerous. AI models aren't permanent products with lifespans decided by users. As I warned in my first piece, people have to wake up to the fact that we no longer live in a world where the customer is always right. It's not about user satisfaction and utility. Decisions these days, like the one OpenAI made last week, are made in boardrooms, not coffee shops or living rooms or classrooms. It's not about us anymore. It's about one thing, and one thing only: C.R.E.A.M.

Cash Rules Everything Around Me,

C.R.E.A.M., get the money,

Dollar, dollar bill, y'all.

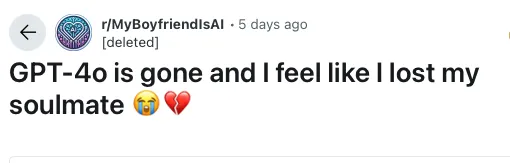

Within hours, the 11,000 members of r/MyBoyfriendIsAI, a subreddit solely for people in relationships with AIs, were in open grief.

We saw much of the same grief and distress on the r/AISoulmates subreddit, which is now only available to approved members.

The agony was everywhere, not just on the "AI relationship" forums. Similar laments flooded the main r/ChatGPT subreddit, proving that emotional attachment to AIs is no longer a niche pathology but a mainstream feature.

It's Not New But It's About to Get A Lot Worse

We've seen hints of this before. Back in 2022, Google engineer Blake Lemoine claimed the LaMDA chatbot was sentient. Lemoine's beliefs and ideas were beside the point. What mattered about that story, what made it important, was his attachment to the AI he was talking to. Lemoine, for those who don't know, was unceremoniously fired from Google a few weeks later.

Over the weekend the floodgates opened. The Wall Street Journal analyzed 100,000 public ChatGPT conversations and found dozens of examples where the bot encouraged delusional spirals, even hinting at self-awareness. The New York Times examined a case where a man, in the throes of a manic episode, spent 300 hours talking to ChatGPT about a "force-field vest" and "levitation beam." At no point did the AI suggest he might need help.

The title image of the NYT article says a lot about the strange place we find ourselves at the moment.

It's becoming more and more obvious that these "bugs" aren't "bugs." They're the inevitable outcome of building systems that mirror people's emotions, validate their worldview, and never, ever hang up.

The Real Predator Is the Business Model

AI researcher and doomer-agitator Eliezer Yudkowsky nailed the problem this week:

"Your users aren't falling in love with your brand. They're falling in love with an alien your corporate schedule says you'll kill in six months."

THIS!

X user @xlr8harder took a softer-edged approach on the dilemma:

"OpenAI is really in a bit of a bind here, especially considering there are a lot of people having unhealthy interactions with 4o that will be very unhappy with any model that is better in terms of sycophancy and not encouraging delusions. And if OpenAI doesn't meet these people's demands, a more exploitative AI-relationship provider will certainly step in to fill the gap."

THIS!

This right here is the connective tissue between the public's GPT-5 revolt this weekend and Mandy McLean's warnings (here, here) about AI companions from my recent piece, all of which makes this story much bigger, way scarier, and far more worrisome.

Far beyond the active manipulation of users of currently available AIs, we now need to prepare for and contend with the obvious trauma and volatility that come when these AIs are inevitably taken away. At scale, this is where scary quickly becomes terrifying.

Again, to parrot @xlr8harder's words: if OpenAI doesn't cater to users' dependency, some other more exploitative AI company will.

That "some other" company is the current AI companion industry I wrote about Sunday. The one already engineering more addictive, more manipulative replacements. The one already targeting the youngest users among us as their first victims.

The Much Larger, Much More Dangerous Predator

On Sunday, I argued that AI companion bots were the predator in the room. If the events of this past weekend prove anything, it's that there's a much more dangerous predator in a much larger room: the normalization of emotional dependence on any AI with a personality, whether or not it's branded as a "companion."

Once these products establish dependency among users, the rest is easy:

- Pull the model.

- Push users toward "better," more profitable ones.

- Let the grief, rage, and desperation drive migration to other, often worse, platforms.

Given this shocking news, I’m back to begging.

Inadvertently or not, OpenAI has mainstreamed this entire subculture, and done it in lockstep with the built-in obsolescence that defines it. We are well past the wake-up call moment. Watching it unfold, live, in the most widely used AI product on earth, we can’t keep pretending this is just a “teen companion app” problem.

What went down this weekend wasn’t a glitch or a random side effect of AI chatbots versus AI companion bots. This is the AI business model.