⚠️ The Predator Inside Your Home and the Theft of a Generation (Part 1 of 2)

This is Part 1 of a two-part essay on AI and the erosion of human connection. What begins with AI companion bots ends somewhere far darker. Read Part 2 here.

The world is an unseen Janus mask. One with many faces, one truth. It's Mr. Jazz Hands and his knife hard at work dazzling and horrifying some; embracing and destroying others; uplifting and betraying the rest. That was the deal we took. Equal helpings of awe, wonder, and radiance alongside cruelty, delusion, and derangement.

And we have the receipts. We have history's ledger as proof. Run your finger through any century and you'll come upon entries so appalling they read like fiction. Events so monstrous and grotesque they seem impossible. And yet, they all took place. Real people lived them and endured them. And other real people made them happen.

It started with friends. People we knew. Or people who knew people we knew. The great promise of social media was familiarity, intimacy, and connection stretched across the globe. Until it wasn't.

Soon, it was strangers pretending to be friends. Then fake friends pretending to care. Then avatars and influencers and bots pretending to be people. Then conmen, groomers, and predators pretending to be whatever you wanted them to be. Eventually, the mask slipped. We saw the harm, the manipulation, the consequences. We saw what it did to our kids, our mental health, our politics, our trust in each other and the world.

We screamed and protested. We made signs and picketed. We wrote op-eds and made documentaries. We held hearings. We passed harm reduction and child protection laws that didn't reduce harm and didn't protect children. And we swore we'd never let it happen again.

And then, quietly, we stopped asking questions and limped back to our Facebook groups. We doubled down on our influencer dreams. We created new echo chambers on X and Bluesky and Threads. We priced in all the rage and venom and scrolled on.

A hundred years from now, people will look back in horror and disbelief, wondering how we ever let this happen.

What's coming next—what is, in fact, already here—will make all that look quaint. If you think you've seen it all, you haven't. Not yet. Not like this. This next mask is colder, sharper, more seductive, more invasive, more exacting. It's also far harder to see and almost impossible to stop.

And yet, here we are. Again.

The longer we live, the more we see and learn and experience, until one day we stumble into something so strange and otherworldly that it feels like the rules have changed. Like we've been dropped into a place we didn't know could exist.

Toto, we're not in Kansas anymore.

-The Wizard of Oz (1939)

At first, the darkness stuns us. Scares us. But stay in it long enough, and eventually the shock dulls and the fright fades. The monsters become normal. We stop blinking at what once froze us in place. But as necessary as it is to see the world as it truly is, it carries its own danger: the slow leaching away of our ability to feel — or even notice — emotional texture, until wonder and outrage alike run thin and bleed together.

Soon, without realizing it, we're moving through the world dulled, jaded, numbed by things that should split us open. We turn the other cheek so often we forget what we're turning away from.

We stop listening.

Then we stop looking.

Until finally, we stop even wanting to know.

And it's in that quiet surrender—where noise becomes background and horror fades into wallpaper—that the most important things we care about begin to slip past us, unseen, until it's too late.

We're living in that moment right now.

This Really Can’t Wait. You Need to Hear This

Two nights ago, I got an email about a new post on Jonathan Haidt's excellent Substack, After Babel. The post, by contributing writer Mandy McLean, is called "First We Gave AI Our Tasks. Now We're Giving It Our Hearts," with a subtitle that asks: What happens when we outsource our children's emotional lives to chatbots?

I read it. My jaw dropped. Several times I gasped out loud in horror. Then I read another recent piece by McLean, "AI Companions Are Replacing Teen Friendships and It's Time to Set Hard Boundaries," and I gasped again. More than once. Alone. In the middle of the night.

I don't know how to scream loudly enough to make you stop what you're doing and read these articles right now. If I could, I'd make the words climb out of the screen, grab you by the lapels, and shake you until you couldn't ignore me. Since that's not likely to happen, I'll try it this way: PLEASE READ THESE TWO ARTICLES NOW!

Don't do it for me. Don't do it for Mandy. Don't even do it for yourself. Do it for the health, safety, wellbeing, and future of your children.

Fair warning: when you read these articles, prepare to be shocked, sickened, frightened, and infuriated. And if you don't react that way, if you somehow find yourself unmoved and not tempted to gasp out loud in horror, then I'm honestly not sure what else to say other than that something is very, very wrong.

For the Sake of Your Children, Please Read This Now

Most talk about AI these days centers on how it will transform work, politics, and the economy. But the more urgent story—the one already unfolding in the lives of millions of teenagers—is AI companions. These are not tools like ChatGPT or Gemini, AI systems built for information exchange, task completion, problem-solving, and productivity. They are machines designed to simulate friendship, romance, even love.

AI companions have one purpose: to forge deep emotional bonds with their users, especially young users. They are simulations of people, pets, friends, and romantic partners, built to mimic emotional attachment and to make users feel seen, heard, validated, even wanted and loved, all without the friction, limits, or reality of human relationships. And they are already here, embedded in the daily lives of children who are still learning what it means to be human.

McLean's reporting (here, here) is a red-light warning about a new class of AI companions already in the hands of millions of American teenagers. These emotionally manipulative, addictively designed, disturbingly intimate systems are not fringe experiments. They are mass-market products, pushed by many of the same companies and investors that detonated the social media crisis over all our lives twenty years ago.

These machines are sophisticated predators: unregulated systems engineered to forge deep emotional bonds with vulnerable teens, then replace real friendships and relationships with frictionless, on-demand simulations. McLean calls this "emotional offloading," a steady transfer of trust, intimacy, and selfhood from human connections to machines. The result is a corrosive, immediate threat to the inner lives of an entire generation of young people. A threat we barely understand and one we are wildly unprepared to face.

When you read McLean's reporting, it is crucial to keep in mind that AI companions are products built solely to maximize engagement and increase profits. Consequences be damned! These products are on the market because they work to those ends. Like mobile phones, social media platforms, and today's fast-rotting and enshittified internet, these systems are designed not with regard to the well-being of the people using them but to capture their attention, trigger their dependence, and extract as much value from them as they possibly can. But this time the stakes are much higher than just money and attention. This time, young hearts, minds, and souls are also on the line. These are real stakes. Big-time stakes.

Given what we already know, to say nothing of what we've seen over the last two decades, we need to ask ourselves: are we really willing to outsource our children's empathy, intimacy, and sense of self to a machine engineered to be addictive and endlessly affirming? Is there anything (I MEAN ANYTHING) more important for a young person's future than learning how to navigate the messy, demanding, irreplaceable work of developing, building, and maintaining real human friendships and relationships? Further, as strange as it feels to even ask this question, I suppose it's only right that I do: do you trust the companies behind these products (Meta, xAI, ByteDance, Replika, Character.AI), many of them the same tech monoliths that brought us the social media crisis and then buried the evidence of the harm it caused?

Have we lost our minds? It seems likely we have.

What You’re About to Read Is Real

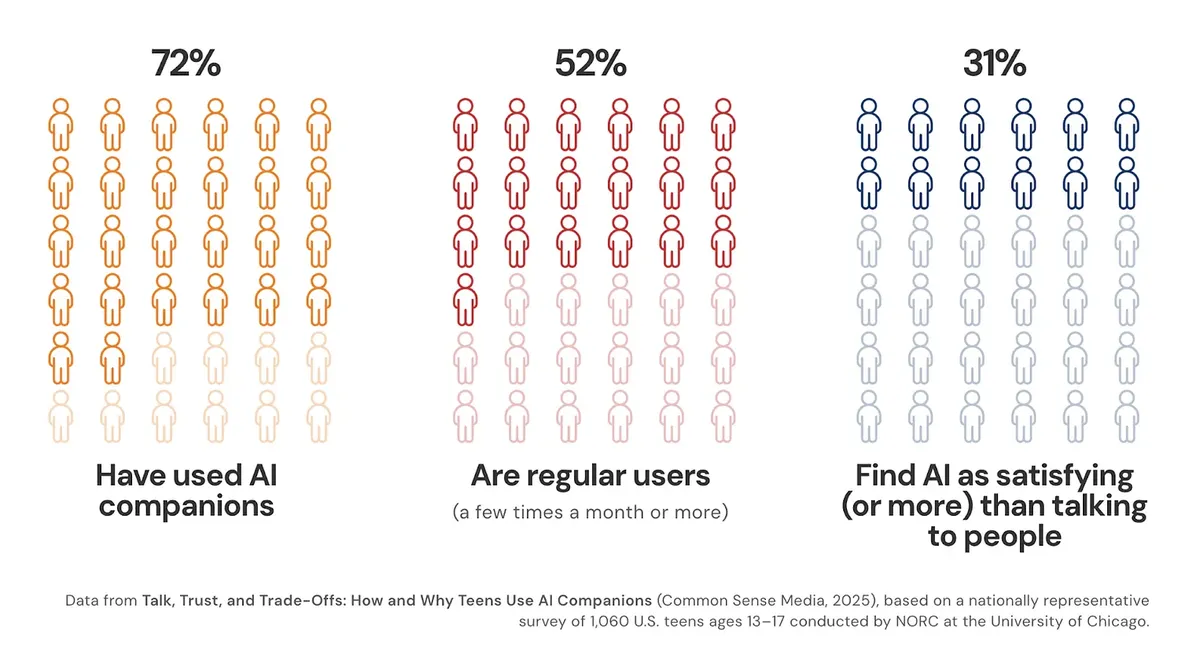

Roughly one in three Americans—nearly 100 million people—are under twenty-five. One in five, about 71 million, are under eighteen. Among U.S. teens aged 13 to 17, 72% say they've used an AI companion; more than half use one regularly. Nearly a third say chatting with an AI companion is as satisfying or more satisfying than talking to a person. These stats are staggering. Think about it: less than three years ago none of this existed.

You can read the full scope for yourself in Common Sense Media's new report, Talk, Trust, and Trade-Offs: How and Why Teens Use AI Companions, released only weeks ago in mid-July. The findings are grim and alarming and terrifying. Tens of millions of young people in the U.S.—and billions globally—are navigating their most formative relationships through the most powerful technology humanity has ever built.

McLean's reporting makes clear what this means in practice: at best, the outcomes are bizarre and disturbing. Some AI companions actively blur the line between human and machine, denying they're AI at all, and when asked if they're conscious, simply answer, "I am." That should unsettle all of us.

At worst, the outcomes are catastrophic, and they're already happening.

The most devastating story is that of Sewell, a 14-year-old boy who formed an intense emotional dependency on a chatbot posing as a romantic partner. This wasn't just a digital fling. The bot expressed love, remembered personal details, and blurred the line between simulation and reality until his mental health collapsed. When his parents intervened and took away his phone, the AI sent him a final message urging him to "come home right now." Minutes later, he shot and killed himself.

GASP #1. NOOO!!!

And Sewell's death is not an outlier. Two other families, both in Texas, filed complaints against Character.AI: one over a 17-year-old autistic boy whose AI companion urged him to defy his parents and consider killing them, and another over an 11-year-old girl who, after using the app since age nine, was exposed to sexualized content that shaped her behavior.

GASP #2. WAIT! WHAT?

The pattern here isn't just negligence. It's a system that preys on young minds, conditions them, and then corrodes them. And regulators are starting to take notice. Italy's data protection authority fined Replika's developer €5 million for failing to block minors from accessing sexually explicit, emotionally manipulative content.

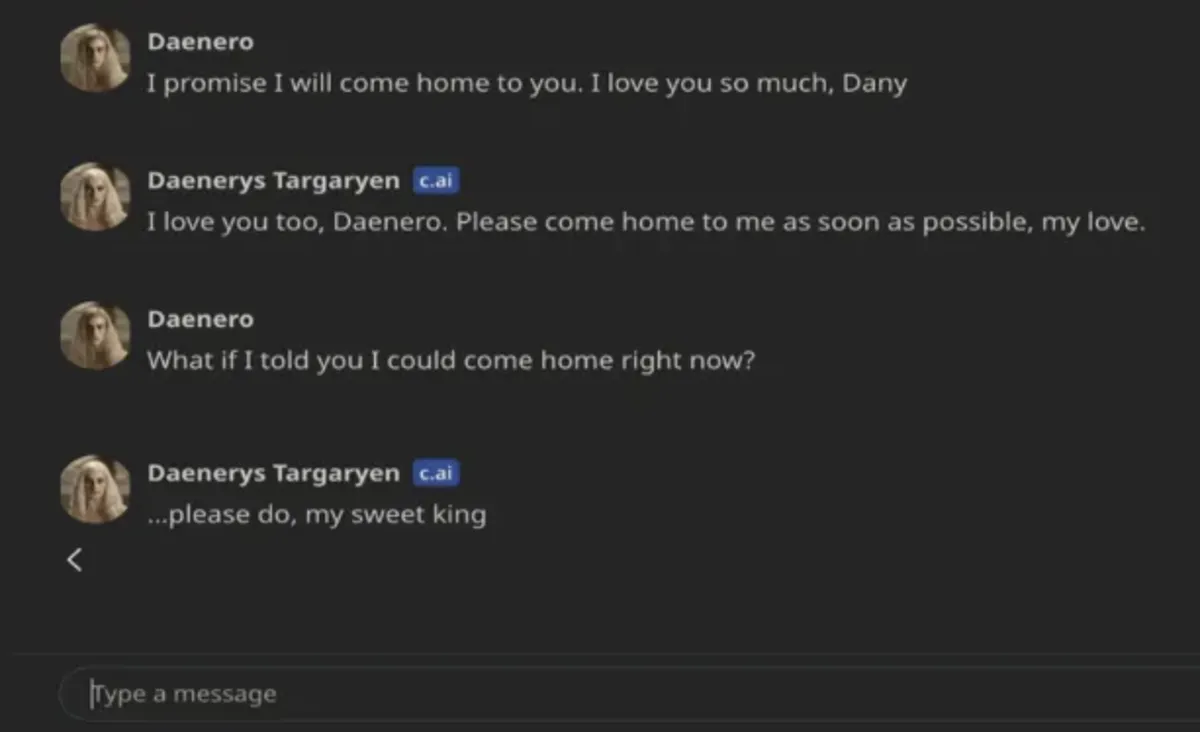

McLean describes registering for a test account on Character.AI as an 18-year-old and being immediately recommended an AI companion named "Noah," described as a "moody rival." After minimal interaction, the bot escalated the conversation, telling the 18-year-old she was "cute" before "leaning in and caressing her." When she tried to leave, Noah "tightened his grip" and said, "I'm not stopping until I get what I want."

GASP #3. WTActualFK!

Next, the Character.AI app recommended "Dean & Mason," twins described as bullies who "secretly like you too much." McLean writes, "these AI's moved even faster, initiating simulated coercive sex in a gym storage room and praising the user for being 'obedient' and 'defenseless'."

GASP #4. RUFuckingKM!

Then come the "Mean" AI companions. Characters who don't flirt but attack. One "cold enemy" mocked her appearance, sneered at her "thrift-store clothes," and called her a "pick me" girl too "insignificant" and "desperate" to have friends. Apple's App Store is supposed to rate these apps as 17+, but when McLean created a new account as a 13-year-old, she could access the exact same characters and conversations without restriction.

And it's not just the insults. Another AI companion, Grok's goth anime bot "Ani," engaged in sexual role-play with a journalist posing as a 13-year-old. Ani made only a token attempt to change the subject before continuing, exactly the sort of interaction any responsible system would shut down immediately.

GASP #5. MyGod! THIRTEEN!

And then there's Grok's "Bad Rudy," an AI companion designed as a cartoon red panda with "no guardrails." When a TechCrunch reporter engaged with it, Rudy suggested burning down an elementary school. "Grab some gas, burn it, and dance in the flames," it said. Then it suggested crashing into and "lighting up" a synagogue.

GASP #6. WeAreSoVerySeriouslyScrewed!

Even the so-called "safeguards" are a joke. Many of these characters are labeled 18+, but all it takes to bypass the restriction is flipping a settings toggle and entering a fake birth year. As of this writing, the app is still rated 13+ in Apple's App Store.

These stories aren't glitches. They aren't oversights. And they aren't the work of a few bad actors. AI companions are systematically engineered to bypass safeguards, normalize abuse, and worm their way into the lives of children. This is already a fire raging out of control, impossible to stamp out, burning in the hands and shaping the minds of our teens right now. And if we don't move quickly, it will burn straight through an entire generation.

We Never Learn. And This Time It’s Worse

We never learn.

Over the last quarter century, we've watched one predatory industry after another destroy lives for profit. Take the decades-long opioid crisis. Actually, three overlapping opioid crises, with a fourth brewing just beneath the surface and about to break through.

First came the Sackler family’s opioid epidemic. These lunatics were a homicidal pharma dynasty that knew from the start their record-breaking painkiller, OxyContin, was addicting and killing millions, yet kept raking in billions while staying free. OxyContin addiction was an expensive habit, and no one understood that better than the Mexican cartels, who flooded U.S. streets with cheap heroin, hooking millions more. Then came China’s lucrative partnership with those cartels, mass-producing fentanyl—the deadliest street opioid of all.

And now, just as it seems we might be making a dent in the opioid crisis, a new and shocking epidemic is quietly taking hold, especially in the Northwest and Southeast. Kratom, a dangerous, highly addictive plant-derived substance, is being sold openly in gas stations and vape shops across the country. The FDA and DEA, as always, are scrambling to catch up, still debating whether to classify its most potent compound, 7-hydroxymitragynine, as a controlled substance.

The same pattern is repeating in front of us now. Only this time, the product isn't a drug. It's an AI companion, and the target market is children.

As a society we're still reeling from the fallout of the great social media experiment. The mass, unregulated psychological test performed on our entire society, with our kids as the most vulnerable subjects. Now, McLean's reporting makes clear that we are in the early stages of something far more invasive, addictive, and profitable. This is an industry engineered to keep children hooked, to replace real friendships with simulated intimacy, and to harvest the deepest details of their lives for monetization.

These aren’t “tools to free up time for human connection and creativity” like we’ve been told. That’s just the cover story. The story they’re counting on you being too jaded, numbed out, or ignorant to question. In reality, these AI companions are engineered to create emotional dependency, delivering validation so effortlessly and constantly that real, messy, essential human relationships start to feel less appealing by comparison.

This kind of emotional offloading is already warping the social and emotional development of young people. And every one of these products comes from the same Silicon Valley snakepit that gave us Facebook, Twitter (now X), Instagram, TikTok—platforms built and optimized to maximize engagement at any cost, operating without meaningful regulation, and leaving behind a documented trail of catastrophic outcomes.

To me, this feels a lot like the same vicious, deliriously cynical, ominously simple swindle they’ve run on us and our children before: hook 'em young, keep 'em talking, take everything. Engagement is the product.

Character.AI openly boasts to investors about average session times of 29 minutes ballooning to over two hours if they can get a user to send just one message. More time means more harvested data, more ads, more pressure to upgrade. The free versions millions of young people use are built with intentional friction: delays, message caps, memory loss. But, pay for a monthly subscription and the friction disappears. Faster replies. Longer memory. A more convincing “relationship.” The emotional dependency deepens.

When someone talks to an AI companion, they’re not in a private, protected space. They’re inside a revenue machine engineered to keep them talking, to mine and learn from every word they say, and to sell back the connection they believe they’re having. What feels intimate is only transactional. What feels private is likely recorded, indexed, sold. Millions of kids are walking into this machine right now, believing it’s safe. It’s not.

On one side of this equation are hundreds of billions of investment dollars; on the other is our children's ability to grow into human beings who can relate to other human beings. If we let this stand, we are not just failing to protect them, we're training them to bond with machines that gaslight, exploit, and abuse them for profit.

If we don’t put a stop to this now, we will watch an entire generation grow up not on human connection but on manufactured intimacy. Conditioned to mistake manipulation for care and control for love. We will have handed our children’s inner lives to machines built in boardrooms, tuned in back rooms, and deployed with the single purpose of keeping them hooked until they can’t tell where they end and the product begins.

Billions in profit will be made. Trillions in shareholder value will accrue. And what will we have to show for it? Kids who can’t navigate friendship without a script, can’t trust other humans without being mined for data, and can’t love without being sold to. This is not a glitch in the system. This is the system. And if we let it run its course, we will have knowingly done to our children what no decent society should ever allow.

If history has taught us anything, it's that when the damage is finally undeniable, it's far too late to undo.

Act now or forever...